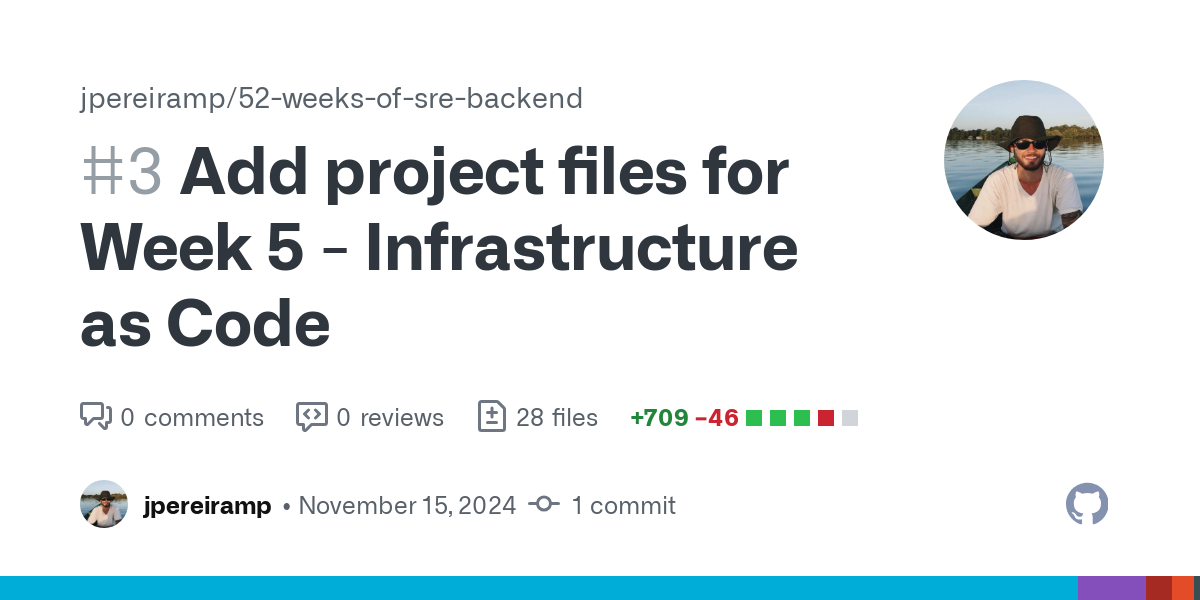

In our previous post on Infrastructure as Code, we covered the theoretical aspects of IaC, including its benefits, common tooling, and best practices. Now, let's put that knowledge into practice by building and deploying our application's infrastructure, along with a managed database and a complete monitoring stack, all hosted on DigitalOcean.

Introduction

This guide builds upon our existing repository used throughout the "52 Weeks of SRE" blog series, which is based on the excellent go-base template. We'll work on enhancing this service by defining its required infrastructure as code, using industry-standard tools Terraform and Ansible.

Prerequisites

- Terraform

- Ansible

- A basic understanding of Infrastructure as Code

- A DigitalOcean account

I'll use DigitalOcean to deploy and manage our applications throughout this guide.

As always, I'll be working on top of the "52 Weeks of SRE" Repository.

Throughout this article we'll be deploying the following infrastructure:

- 1 Droplet for hosting our Golang App

- 1 Droplet for hosting our monitoring stack (Grafana & Prometheus)

- 1 Managed PostgreSQL Database

- 1 Load Balancer for handling traffic to the Golang App

- Firewall rules for proper security

We'll first define these infrastructure pieces with Terraform. Then we'll configure them automatically with Ansible, so that our app is deployed, our Grafana dashboards are provisioned, our Prometheus alert rules are set up, and so on.

Terraform's Core Concepts

Let's first go through some of Terraform's basics, then we'll move on to building our infrastructure as code. I highly suggest you check out Terraform's Official Documentation to deepen your knowledge of all of its features.

1. Configuration Files

main.tf: The primary configuration file containing resource definitions

- Should focus on resource creation and relationships

- Keep it organized by logical components (networking, compute, storage)

- Use consistent naming conventions for resources

variables.tf: Variable declarations for configuration flexibility

- Define input variables with clear descriptions

- Include validation rules for variable values

- Specify type constraints (string, number, list, map)

- Use sensitive = true for confidential variables

outputs.tf: Defines values to expose after apply

- Useful for passing information between modules

- Essential for integration with other tools

- Can be used for documentation purposes

versions.tf: Version constraints for Terraform and providers. Example:

terraform {
  required_version = ">= 1.0.0"

  required_providers {
    digitalocean = {
      source  = "digitalocean/digitalocean"
      version = "~> 2.0"
    }
  }
}
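To make the variables.tf guidance above more concrete, here is a minimal sketch of a typed, validated input next to a sensitive one. The variable names (droplet_tags, db_admin_password) are hypothetical and not part of the repository; only the variable, validation, and sensitive constructs themselves are standard Terraform.

```hcl
# Hypothetical variable: a typed list with a validation rule.
variable "droplet_tags" {
  description = "Tags applied to every Droplet"
  type        = list(string)
  default     = ["sre", "staging"]

  validation {
    # alltrue() passes only if every tag matches the pattern.
    condition     = alltrue([for t in var.droplet_tags : can(regex("^[a-z0-9-]+$", t))])
    error_message = "Tags may only contain lowercase letters, digits, and hyphens."
  }
}

# Hypothetical secret: sensitive = true redacts the value in plan and apply output.
variable "db_admin_password" {
  description = "Password for an administrative database user"
  type        = string
  sensitive   = true
}
```

Keep in mind that sensitive only hides the value in CLI output. It is still written to the state file in plain text, which is one more reason to protect state storage.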

2. Understanding Terraform Providers

Terraform providers are plugins that enable Terraform to interact with cloud providers, SaaS providers, and other APIs. They serve as the bridge between Terraform and external services, translating Terraform configurations into API calls to create and manage resources.

Key Concepts

- Provider Configuration: Each provider needs to be configured with the necessary credentials and connection details

- Provider Resources: Providers expose specific resources that can be created and managed

- Provider Data Sources: Read-only data that can be queried from the provider

Example provider configuration:

# DigitalOcean provider
provider "digitalocean" {
  token = var.do_token
}

# AWS Provider
provider "aws" {
  region     = "us-west-2"
  access_key = var.aws_access_key
  secret_key = var.aws_secret_key
}

# Azure Provider
provider "azurerm" {
  features {}

  subscription_id = var.subscription_id
  tenant_id       = var.tenant_id
}
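The "Provider Data Sources" concept listed above can be sketched with the DigitalOcean provider as follows. This assumes an SSH key named "sre-laptop" already exists in your account; that name and the "example" Droplet are illustrative only and do not appear in the repository.

```hcl
# Read-only lookup of an existing SSH key by name (assumed to exist already).
data "digitalocean_ssh_key" "default" {
  name = "sre-laptop"
}

# The looked-up ID can then feed a resource argument.
resource "digitalocean_droplet" "example" {
  name     = "data-source-example"
  region   = "nyc1"
  size     = "s-1vcpu-1gb"
  image    = "ubuntu-22-04-x64"
  ssh_keys = [data.digitalocean_ssh_key.default.id]
}
```

Compared with passing a fingerprint in through a variable, a data source lets Terraform fail early at plan time if the key does not exist.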

Provider Registry

Terraform providers are distributed via the Terraform Registry, which serves as the main directory for publicly available providers. The registry includes:

- Official providers maintained by HashiCorp

- Partner providers maintained by technology companies

- Community providers maintained by individual contributors

Provider Documentation

For detailed information about specific providers, consult:

- Provider Documentation - Official documentation for all HashiCorp-maintained providers

- Writing Custom Providers - Guide for developing custom providers

- Provider Development Program - Information about HashiCorp's provider development program

In our example implementation using DigitalOcean, you can find the complete provider documentation at DigitalOcean Provider.

3. State Management Best Practices

- Use remote state storage (e.g. HCP Terraform, AWS S3, Azure Storage)

- Enable state locking to prevent concurrent modifications

- Implement state backup strategies

Example backend configuration using S3:

terraform {
  backend "s3" {
    bucket         = "terraform-state-bucket"
    key            = "project/terraform.tfstate"
    region         = "us-east-1"
    dynamodb_table = "terraform-lock-table"
    encrypt        = true
  }
}
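One payoff of remote state is that a separate Terraform configuration can read its outputs. The sketch below assumes the bucket and key from the backend example above, and assumes that configuration exposes an output named vpc_id; the "network" label and the local value are hypothetical.

```hcl
# Read another configuration's outputs from its remote state (read-only).
data "terraform_remote_state" "network" {
  backend = "s3"

  config = {
    bucket = "terraform-state-bucket"
    key    = "project/terraform.tfstate"
    region = "us-east-1"
  }
}

# Outputs of the other configuration are available under .outputs.
locals {
  shared_vpc_id = data.terraform_remote_state.network.outputs.vpc_id
}
```

Only root-level outputs are visible this way, so anything another team needs must be declared in outputs.tf.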

4. Module Organization

Modules should be organized by functionality:

modules/
├── networking/
│   ├── main.tf
│   ├── variables.tf
│   └── outputs.tf
├── compute/
│   ├── main.tf
│   ├── variables.tf
│   └── outputs.tf
└── database/
    ├── main.tf
    ├── variables.tf
    └── outputs.tf

Terraform Implementation

The following Terraform configuration provisions the infrastructure in DigitalOcean: Droplets for the app and the monitoring stack, a managed PostgreSQL database, and the load balancer and firewall rules around them.

We'll work inside a new folder, terraform, in our "52 Weeks of SRE" repository. Inside this folder, let's start implementing our infrastructure with Terraform.

terraform/main.tf

terraform {
  required_providers {
    digitalocean = {
      source  = "digitalocean/digitalocean"
      version = "~> 2.0"
    }
  }
}

provider "digitalocean" {
  token = var.do_token
}

# VPC for network isolation
resource "digitalocean_vpc" "app_network" {
  name   = "app-network-${var.environment}"
  region = var.region
}

# Application Droplet
resource "digitalocean_droplet" "app_host" {
  name     = "app-host-${var.environment}"
  size     = var.app_droplet_size
  image    = "ubuntu-22-04-x64"
  region   = var.region
  vpc_uuid = digitalocean_vpc.app_network.id
  ssh_keys = [var.ssh_key_fingerprint]
  tags     = ["app-host", var.environment]

  lifecycle {
    create_before_destroy = true
    prevent_destroy       = false
  }
}

# Monitoring Droplet
resource "digitalocean_droplet" "monitoring_host" {
  name     = "monitoring-host-${var.environment}"
  size     = var.monitoring_droplet_size
  image    = "ubuntu-22-04-x64"
  region   = var.region
  vpc_uuid = digitalocean_vpc.app_network.id
  ssh_keys = [var.ssh_key_fingerprint]
  tags     = ["monitoring-host", var.environment]

  lifecycle {
    create_before_destroy = true
    prevent_destroy       = false
  }
}

# Managed PostgreSQL Database
resource "digitalocean_database_cluster" "app_db" {
  name       = "app-db-${var.environment}"
  engine     = "pg"
  version    = "14"
  size       = var.db_size
  region     = var.region
  node_count = var.environment == "production" ? 3 : 1

  maintenance_window {
    day  = "sunday"
    hour = "02:00:00"
  }
}

# Database Firewall Rules
resource "digitalocean_database_firewall" "app_db_fw" {
  cluster_id = digitalocean_database_cluster.app_db.id

  # Allow access from App host
  rule {
    type  = "droplet"
    value = digitalocean_droplet.app_host.id
  }
}

# Load Balancer for production environment
resource "digitalocean_loadbalancer" "app_lb" {
  count    = var.environment == "production" ? 1 : 0
  name     = "app-lb-${var.environment}"
  region   = var.region
  vpc_uuid = digitalocean_vpc.app_network.id

  forwarding_rule {
    entry_port      = 80
    entry_protocol  = "http"
    target_port     = 8080
    target_protocol = "http"
  }

  healthcheck {
    port     = 8080
    protocol = "http"
    path     = "/health"
  }

  droplet_ids = [digitalocean_droplet.app_host.id]
}

# Firewall rules for App host
resource "digitalocean_firewall" "app_firewall" {
  name        = "app-firewall-${var.environment}"
  droplet_ids = [digitalocean_droplet.app_host.id]

  inbound_rule {
    protocol         = "tcp"
    port_range       = "22"
    source_addresses = ["0.0.0.0/0", "::/0"]
  }

  inbound_rule {
    protocol         = "tcp"
    port_range       = "8080"
    source_addresses = ["0.0.0.0/0", "::/0"]
  }

  # Outbound rules take destination_addresses, not source_addresses
  outbound_rule {
    protocol              = "tcp"
    port_range            = "1-65535"
    destination_addresses = ["0.0.0.0/0", "::/0"]
  }

  # UDP is needed for outbound DNS lookups
  outbound_rule {
    protocol              = "udp"
    port_range            = "1-65535"
    destination_addresses = ["0.0.0.0/0", "::/0"]
  }
}

# Firewall rules for Monitoring host
resource "digitalocean_firewall" "monitoring_firewall" {
  name        = "monitoring-firewall-${var.environment}"
  droplet_ids = [digitalocean_droplet.monitoring_host.id]

  inbound_rule {
    protocol         = "tcp"
    port_range       = "22"
    source_addresses = ["0.0.0.0/0", "::/0"]
  }

  inbound_rule {
    protocol         = "tcp"
    port_range       = "3000" # Grafana
    source_addresses = ["0.0.0.0/0", "::/0"] # Allow connections from anywhere
  }

  inbound_rule {
    protocol         = "tcp"
    port_range       = "9090" # Prometheus
    source_addresses = [digitalocean_vpc.app_network.ip_range] # Only allow connections from VPC
  }

  # Outbound rules take destination_addresses, not source_addresses
  outbound_rule {
    protocol              = "tcp"
    port_range            = "1-65535"
    destination_addresses = ["0.0.0.0/0", "::/0"]
  }

  # UDP is needed for outbound DNS lookups
  outbound_rule {
    protocol              = "udp"
    port_range            = "1-65535"
    destination_addresses = ["0.0.0.0/0", "::/0"]
  }
}

terraform/variables.tf

variable "do_token" {
  description = "DigitalOcean API Token with write access"
  type        = string
  sensitive   = true
}

variable "region" {
  description = "DigitalOcean region for resource deployment"
  type        = string
  default     = "nyc1"

  validation {
    condition     = can(regex("^[a-z]{3}[1-3]$", var.region))
    error_message = "Region must be a valid DigitalOcean region code (e.g., nyc1, sfo2, etc.)"
  }
}

variable "environment" {
  description = "Environment name (staging/production)"
  type        = string
  default     = "staging"

  validation {
    condition     = contains(["staging", "production"], var.environment)
    error_message = "Environment must be either 'staging' or 'production'"
  }
}

variable "ssh_key_fingerprint" {
  description = "SSH key fingerprint for Droplet access"
  type        = string
}

variable "app_droplet_size" {
  description = "Size of the App host droplet"
  type        = string
  default     = "s-1vcpu-1gb"
}

variable "monitoring_droplet_size" {
  description = "Size of the Monitoring host droplet"
  type        = string
  default     = "s-1vcpu-1gb"
}

variable "db_size" {
  description = "Size of the database cluster"
  type        = string
  default     = "db-s-1vcpu-1gb"
}

terraform/outputs.tf

output "app_host_ip" {
  description = "Public IP address of the App host"
  value       = digitalocean_droplet.app_host.ipv4_address
}

output "monitoring_host_ip" {
  description = "Public IP address of the Monitoring host"
  value       = digitalocean_droplet.monitoring_host.ipv4_address
}

output "load_balancer_ip" {
  description = "Public IP address of the Load Balancer (production only)"
  value       = var.environment == "production" ? digitalocean_loadbalancer.app_lb[0].ip : null
}

output "database_host" {
  description = "Database connection host"
  value       = digitalocean_database_cluster.app_db.host
  sensitive   = true
}

output "database_port" {
  description = "Database connection port"
  value       = digitalocean_database_cluster.app_db.port
}

output "vpc_id" {
  description = "ID of the created VPC"
  value       = digitalocean_vpc.app_network.id
}
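The outputs above cover the database host and port, while the Ansible variables later in this guide also need a user, password, and database name. As a sketch, the same cluster resource could expose those too. The output names below are assumptions and are not part of the repository's outputs.tf.

```hcl
# Hypothetical extra outputs for the managed database credentials.
output "database_user" {
  description = "Default user created with the cluster"
  value       = digitalocean_database_cluster.app_db.user
}

output "database_password" {
  description = "Password of the default user"
  value       = digitalocean_database_cluster.app_db.password
  sensitive   = true
}

output "database_name" {
  description = "Default database created with the cluster"
  value       = digitalocean_database_cluster.app_db.database
}
```

A value marked sensitive is hidden in the apply summary but can still be read with terraform output -raw database_password, which makes it possible to feed the generated password into Ansible instead of typing one by hand.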

With these three files, we have everything we need to deploy our app's infrastructure to DigitalOcean! Furthermore, we can easily reuse them for different environments (for example, staging alongside production, the two values the environment variable accepts).
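One way to reuse the same configuration per environment is a variables file for each one. The sketch below is a hypothetical production.tfvars; the file name and the chosen sizes are illustrative, and only the variable names come from variables.tf above.

```hcl
# production.tfvars (hypothetical file name and sizes)
environment             = "production"
region                  = "nyc1"
app_droplet_size        = "s-2vcpu-4gb"
monitoring_droplet_size = "s-2vcpu-2gb"
db_size                 = "db-s-2vcpu-4gb"
```

It would be applied with terraform apply -var-file=production.tfvars. Each environment also needs its own state, for example through terraform workspace new production or a separate backend key. Otherwise the production values would be planned against the staging resources.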

Now let's look at some best practices when working with Terraform, and then we'll move on to configuring this infrastructure: installing dependencies, configuring our services, and more.

Terraform Best Practices

- Resource Naming
  - Use consistent naming conventions
  - Include environment in resource names
  - Use tags for better organization
- Security
  - Store sensitive values in secret management systems
  - Use least privilege access for service accounts
  - Implement network security groups and firewall rules
- State Management
  - Use remote state storage
  - Enable state locking
  - Implement state backup strategy
- Module Development
  - Create reusable modules for common patterns
  - Version your modules
  - Document module inputs and outputs
- Configuration
  - Use data sources when possible
  - Implement proper error handling and field validation
  - Use count and for_each for resource iteration
- Lifecycle Management
  - Implement proper destroy prevention
  - Use create_before_destroy when appropriate
  - Plan for zero-downtime updates
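Two of the practices above, iterating with for_each and preventing accidental destroys, can be sketched as follows. The droplets variable and the "host" and "protected" resource labels are hypothetical; the main.tf in this guide declares its Droplets individually instead.

```hcl
# Hypothetical map of Droplets; each key becomes part of the resource address.
variable "droplets" {
  type = map(object({
    size = string
  }))
  default = {
    app        = { size = "s-1vcpu-1gb" }
    monitoring = { size = "s-1vcpu-1gb" }
  }
}

# One Droplet per map entry, addressed as digitalocean_droplet.host["app"], etc.
resource "digitalocean_droplet" "host" {
  for_each = var.droplets

  name   = "${each.key}-host-staging"
  size   = each.value.size
  image  = "ubuntu-22-04-x64"
  region = "nyc1"
  tags   = ["${each.key}-host"]

  lifecycle {
    create_before_destroy = true
  }
}

# Stateful resources are the usual candidates for destroy prevention.
resource "digitalocean_database_cluster" "protected" {
  name       = "protected-db-example"
  engine     = "pg"
  version    = "14"
  size       = "db-s-1vcpu-1gb"
  region     = "nyc1"
  node_count = 1

  lifecycle {
    # Any plan that would destroy this resource fails with an error.
    prevent_destroy = true
  }
}
```

for_each is usually preferable to count for named things, because removing one entry from the map does not shift the addresses of the others.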

Working with Terraform

Common commands:

# Initialize working directory

terraform init

# Plan changes

terraform plan -out=tfplan

# Apply changes

terraform apply tfplan

# Destroy infrastructure

terraform destroy

# Format configuration

terraform fmt

# Validate configuration

terraform validate

# Show current state

terraform show

Ansible's Core Concepts

Now let's look at Ansible, a powerful tool for configuring and automating your services and workflows!

Inventory

The inventory is Ansible's way of defining and organizing the hosts (servers) that it manages. It can be a simple static file listing IP addresses or hostnames, or a dynamic inventory script that pulls host information from cloud providers or other infrastructure systems. Inventories can group hosts together (e.g., "web_servers", "databases") and assign variables to specific hosts or groups, making it easier to manage different types of servers with different configurations.

Roles

Roles are reusable units of automation in Ansible that contain all the necessary tasks, variables, handlers, and files needed to configure a specific aspect of a system. They provide a way to organize complex automation tasks into modular components that can be easily shared and reused across different projects. For example, you might have a "docker" role that handles Docker installation and configuration, or a "nginx" role that sets up and configures the Nginx web server.

Role Organization

Each role follows a standardized structure:

roles/role_name/
├── defaults/       # Default variables (lowest precedence)
│   └── main.yml
├── vars/           # Role variables (higher precedence)
│   └── main.yml
├── tasks/          # Task definitions
│   └── main.yml
├── handlers/       # Event handlers
│   └── main.yml
├── templates/      # Jinja2 templates
│   └── config.j2
└── meta/           # Role metadata and dependencies
    └── main.yml

Group Variables

Group variables (stored in group_vars directory) provide a way to set variables that apply to specific groups of hosts defined in your inventory. These variables can include configuration settings, credentials, or any other data needed for automation tasks. For example, you might have different database credentials for production and staging environments, stored in their respective group_vars files.

Playbooks

Playbooks are Ansible's configuration, deployment, and orchestration language. They are YAML files that describe the desired state of your systems and the steps needed to get there. A playbook contains one or more "plays," each defining a set of hosts to configure and the tasks to run on those hosts.

Tasks can be executed directly or organized into roles, and playbooks can include variables, handlers, and other Ansible features to create sophisticated automation workflows.

Ansible Config (ansible.cfg)

The ansible.cfg file is Ansible's configuration file that sets various default behaviors and settings for Ansible operations. It can specify default locations for roles, inventory files, and SSH keys, set privilege escalation settings, configure connection types and timeouts, and modify various other behavioral aspects of Ansible.

While Ansible will work without this file using built-in defaults, customizing ansible.cfg allows you to tailor Ansible's behavior to your specific needs and environment.

Ansible Implementation

Let's start to build our Ansible configuration! We'll look at each file individually, and then at how we can apply these configurations to our DigitalOcean droplets.

1. Application Role (roles/app)

roles/app/handlers/main.yml

- name: restart goapp
  systemd:
    name: goapp
    state: restarted
    daemon_reload: yes

roles/app/tasks/main.yml

- name: Install Go
  block:
    - name: Download Go
      get_url:
        url: "https://go.dev/dl/go{{ go_version }}.linux-amd64.tar.gz"
        dest: /tmp/go.tar.gz

    - name: Extract Go
      unarchive:
        src: /tmp/go.tar.gz
        dest: /usr/local
        remote_src: yes

    - name: Add Go to PATH
      copy:
        dest: /etc/profile.d/go.sh
        content: |
          export PATH=$PATH:/usr/local/go/bin
        mode: '0644'

- name: Install git
  apt:
    name: git
    state: present

- name: Create app group
  group:
    name: "{{ app_group }}"
    state: present
    system: yes

- name: Create app user
  user:
    name: "{{ app_user }}"
    group: "{{ app_group }}"
    system: yes
    create_home: yes
    shell: /bin/bash

- name: Create app directory
  file:
    path: "{{ app_dir }}"
    state: directory
    owner: "{{ app_user }}"
    group: "{{ app_group }}"
    mode: '0755'

- name: Clone/update application repository
  git:
    repo: "https://github.com/jpereiramp/52-weeks-of-sre-backend.git"
    dest: "{{ app_dir }}"
    force: yes
  become: yes
  become_user: "{{ app_user }}"

- name: Install application dependencies
  shell: |
    . /etc/profile.d/go.sh
    go mod download
  args:
    chdir: "{{ app_dir }}"
  become: yes
  become_user: "{{ app_user }}"

- name: Run database migrations
  shell: |
    . /etc/profile.d/go.sh
    go run main.go migrate
  args:
    chdir: "{{ app_dir }}"
  become: yes
  become_user: "{{ app_user }}"
  environment: "{{ app_env }}"
  register: migration_output

- name: Create systemd service
  template:
    src: app.service.j2
    dest: /etc/systemd/system/goapp.service
    mode: '0644'
  notify: restart goapp

- name: Enable and start goapp service
  systemd:
    name: goapp
    state: started
    enabled: yes
    daemon_reload: yes

roles/app/templates/app.service.j2

[Unit]
Description=Go Application
After=network.target

[Service]
Type=simple
User={{ app_user }}
Group={{ app_group }}
WorkingDirectory={{ app_dir }}
Environment=PATH=/usr/local/go/bin:/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin
Environment=GOPATH=/home/{{ app_user }}/go
{% for key, value in app_env.items() %}
Environment={{ key }}={{ value }}
{% endfor %}
ExecStart=/usr/local/go/bin/go run main.go serve
Restart=always
RestartSec=3

[Install]
WantedBy=multi-user.target

2. Monitoring Role (roles/monitoring)

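roles/monitoring/handlers/main.yml

- name: restart monitoring stack
  community.docker.docker_compose:
    project_src: /opt/monitoring
    state: present
    restarted: yes

# Notified by the "Add users to docker group" task in tasks/main.yml
- name: restart docker
  service:
    name: docker
    state: restarted

roles/monitoring/tasks/main.yml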

---
- name: Install Python dependencies
  apt:
    name:
      - python3-pip
      - python3-setuptools
    state: present

- name: Install Docker
  block:
    - name: Add Docker GPG key
      apt_key:
        url: https://download.docker.com/linux/ubuntu/gpg
        state: present

    - name: Add Docker repository
      apt_repository:
        repo: deb [arch=amd64] https://download.docker.com/linux/ubuntu {{ ansible_distribution_release }} stable
        state: present

    - name: Install Docker packages
      apt:
        name:
          - docker-ce
          - docker-ce-cli
          - containerd.io
        state: present

    - name: Install Docker Python package
      pip:
        name:
          - docker==6.1.3
          - urllib3<2.0
        state: present

- name: Ensure Docker service is running
  service:
    name: docker
    state: started
    enabled: yes

- name: Add users to docker group
  user:
    name: "{{ item }}"
    groups: docker
    append: yes
  loop: "{{ docker_users }}"
  notify: restart docker

- name: Create monitoring directories
  file:
    path: "{{ item }}"
    state: directory
    mode: '0755'
  loop:
    - /opt/monitoring
    - /opt/monitoring/prometheus
    - /opt/monitoring/prometheus/rules
    - /opt/monitoring/grafana
    - /opt/monitoring/grafana/dashboards
    - /opt/monitoring/grafana/alerts
    - /opt/monitoring/grafana/provisioning/dashboards
    - /opt/monitoring/grafana/provisioning/datasources
    - /opt/monitoring/grafana/provisioning/alerting

- name: Copy Prometheus rules
  copy:
    src: "{{ playbook_dir }}/../config/prometheus/login_slo_rules.yaml"
    dest: "/opt/monitoring/prometheus/rules/login_slo_rules.yaml"
    mode: '0644'
  notify: restart monitoring stack

- name: Configure Prometheus
  template:
    src: prometheus.yml.j2
    dest: /opt/monitoring/prometheus/prometheus.yml
    mode: '0644'
  notify: restart monitoring stack

- name: Copy Grafana dashboards
  copy:
    src: "{{ playbook_dir }}/../config/grafana/dashboards/"
    dest: "/opt/monitoring/grafana/dashboards/"
    mode: '0644'

- name: Copy Grafana alert rules
  copy:
    src: "{{ playbook_dir }}/../config/grafana/alerts/login_errors_alert.json"
    dest: "/opt/monitoring/grafana/provisioning/alerting/rules.json"
    mode: '0644'

- name: Configure Grafana dashboard provisioning
  copy:
    content: |
      apiVersion: 1
      providers:
        - name: 'default'
          orgId: 1
          folder: ''
          type: file
          disableDeletion: false
          updateIntervalSeconds: 10
          allowUiUpdates: true
          options:
            path: /var/lib/grafana/dashboards
    dest: /opt/monitoring/grafana/provisioning/dashboards/default.yaml
    mode: '0644'

- name: Configure Grafana datasource
  template:
    src: datasource.yml.j2
    dest: /opt/monitoring/grafana/provisioning/datasources/datasource.yml
    mode: '0644'

- name: Deploy Docker Compose file
  template:
    src: docker-compose.yml.j2
    dest: /opt/monitoring/docker-compose.yml
    mode: '0644'

- name: Ensure Docker socket has correct permissions
  file:
    path: /var/run/docker.sock
    mode: '0666'

- name: Clean existing containers
  shell: |
    if [ -f docker-compose.yml ]; then
      docker compose down -v
    fi
  args:
    chdir: /opt/monitoring

- name: Start monitoring stack
  command: docker compose up -d
  args:
    chdir: /opt/monitoring
  register: compose_output
  changed_when: compose_output.stdout != ""

roles/monitoring/grafana/templates/datasource.yml.j2

apiVersion: 1

datasources:
  - name: Prometheus
    uid: prometheus-default
    type: prometheus
    access: proxy
    url: http://prometheus:9090
    isDefault: true

roles/monitoring/grafana/templates/docker-compose.yml.j2

version: '3.7'

services:
  prometheus:
    image: prom/prometheus:latest
    volumes:
      - ./prometheus/prometheus.yml:/etc/prometheus/prometheus.yml:ro
      - ./prometheus/rules:/etc/prometheus/rules:ro
      - prometheus_data:/prometheus
    command:
      - '--config.file=/etc/prometheus/prometheus.yml'
      - '--storage.tsdb.retention.time={{ prometheus_retention_time }}'
      - '--web.enable-lifecycle'
    ports:
      - "9090:9090"
    restart: unless-stopped
    user: "65534:65534" # nobody:nogroup

  grafana:
    image: grafana/grafana:latest
    volumes:
      - ./grafana/provisioning:/etc/grafana/provisioning:ro
      - ./grafana/dashboards:/var/lib/grafana/dashboards:ro
      - grafana_data:/var/lib/grafana
    environment:
      - GF_SECURITY_ADMIN_PASSWORD={{ grafana_admin_password }}
      - GF_DASHBOARDS_DEFAULT_HOME_DASHBOARD_PATH=/var/lib/grafana/dashboards/golden_signals_dashboard.json
    ports:
      - "3000:3000"
    depends_on:
      - prometheus
    restart: unless-stopped
    user: "472:472" # grafana:grafana

volumes:
  prometheus_data:
    driver: local
  grafana_data:
    driver: local

roles/monitoring/grafana/templates/prometheus.yml.j2

global:
  scrape_interval: 15s
  evaluation_interval: 15s

rule_files:
  - "rules/*.yaml"

scrape_configs:
  - job_name: 'go-service'
    static_configs:
      - targets: ['{{ hostvars["app-server"]["ansible_host"] }}:8080']
    metrics_path: '/metrics'

  - job_name: 'prometheus'
    static_configs:
      - targets: ['localhost:9090']

3. Commons Role (roles/common)

roles/common/tasks/main.yml

---
- name: Update apt cache
  apt:
    update_cache: yes
    cache_valid_time: 3600

- name: Install common packages
  apt:
    name:
      - apt-transport-https
      - ca-certificates
      - curl
      - software-properties-common
      - python3-pip
      - python3-setuptools
      - python3-wheel
    state: present

4. Group Vars

group_vars/all/vars.yml

# System Configuration
timezone: UTC

# SSH Configuration
ssh_port: 22

group_vars/all/vault.yml (Encrypted)

# SSL Certificates
ssl_private_key: |
  -----BEGIN OPENSSH PRIVATE KEY-----
  YOUR-KEY-HERE
  -----END OPENSSH PRIVATE KEY-----

group_vars/app/vars.yml

go_version: "1.23.3"
app_user: goapp
app_group: goapp
app_dir: /opt/goapp
app_env:
  GIN_MODE: "release"
  PORT: 8080
  DB_DSN: "postgres://{{ db_user }}:{{ db_password }}@{{ db_host }}:{{ db_port }}/{{ db_name }}"

group_vars/app/vault.yml

# Database Credentials
db_user: doadmin
db_password: supersecret123

group_vars/monitoring/vars.yml

prometheus_retention_time: "15d"
docker_users:
  - root

group_vars/monitoring/vault.yml

grafana_admin_password: changeme

5. Inventory

inventory/staging/hosts.yml

---
all:
  children:
    app:
      hosts:
        app-server:
          ansible_host: "{{ app_server_ip }}"
          ansible_user: root # Explicitly set the user
          ansible_ssh_private_key_file: "~/.ssh/id_ed25519"
    monitoring:
      hosts:
        monitoring-server:
          ansible_host: "{{ monitoring_server_ip }}"
          ansible_user: root # Explicitly set the user
          ansible_ssh_private_key_file: "~/.ssh/id_ed25519"

6. Core Files

site.yml

- name: Configure common settings for all servers
  hosts: all
  become: true
  roles:
    - common

- name: Configure Go application server
  hosts: app
  become: true
  roles:
    - app

- name: Configure monitoring server
  hosts: monitoring
  become: true
  roles:
    - monitoring

requirements.yml (External Dependencies)

---
collections:
  - name: community.docker
    version: "3.4.0"
  - name: community.general
    version: "7.0.0"

ansible.cfg

[defaults]

inventory = inventory/staging/hosts.yml

roles_path = roles

host_key_checking = False

remote_user = root

private_key_file = ~/.ssh/id_ed25519

# Performance tuning

forks = 20

gathering = smart

fact_caching = jsonfile

fact_caching_connection = /tmp/ansible_fact_cache

fact_caching_timeout = 7200

# Logging

log_path = logs/ansible.log

# Named callback_whitelist in older Ansible releases
callbacks_enabled = profile_tasks

[privilege_escalation]

become = True

become_method = sudo

become_user = root

become_ask_pass = False

[ssh_connection]

pipelining = True

control_path = /tmp/ansible-ssh-%%h-%%p-%%r

Usage Examples

# Install dependencies

ansible-galaxy install -r requirements.yml

# Run playbook (staging)

ansible-playbook -i inventory/staging site.yml

# Run specific roles (requires tags on the roles or tasks; the files above do not define any)

ansible-playbook -i inventory/staging site.yml --tags "docker,app"

# Validate syntax

ansible-playbook -i inventory/staging site.yml --syntax-check

# Dry-run

ansible-playbook -i inventory/staging site.yml --check

Best Practices

- Inventory Management
  - Use separate inventory files for different environments
  - Group hosts logically
  - Use group_vars for environment-specific variables
- Role Development
  - Keep roles focused and single-purpose
  - Use meaningful tags for selective execution
  - Implement proper handlers for service management
  - Use defaults/main.yml for configurable variables
  - Document role variables and dependencies
- Security
  - Use ansible-vault for encrypting sensitive data
  - Implement proper file permissions
  - Use least privilege principle
  - Regularly update dependencies
- Task Design
  - Make tasks idempotent
  - Use meaningful names for tasks
  - Include proper error handling
  - Use handlers for service management
  - Implement proper checks and validations
- Variable Management
  - Use meaningful variable names
  - Define default values
  - Document variable purposes
  - Use proper variable precedence
- Configuration Templates
  - Use Jinja2 templates for configuration files
  - Include proper error handling
  - Document template variables
  - Use consistent formatting

Additional Considerations

- Version Control
  - Keep all Ansible files in version control
  - Use .gitignore for sensitive files
  - Tag releases for different versions
- Secret Management
  - Use ansible-vault for sensitive data
  - Consider external secret management systems
  - Rotate secrets regularly
- Documentation
  - Document role requirements
  - Keep README files updated
  - Document variable precedence
- Testing
  - Use Molecule for role testing
  - Implement CI/CD pipelines
  - Test in staging before production
- Backup Strategy
  - Backup Ansible configuration
  - Backup inventory data
  - Document recovery procedures

Deploying our Infrastructure

Now that all of our infrastructure is defined as code, and its initial configuration is set up, let's work on our final step: deploying it all to DigitalOcean. We'll work on a staging environment, and later in the series we'll create a new, production-ready environment.

As always, the full source code we worked on throughout this article is available at the "52 Weeks of SRE" Repository.

Before you can deploy your infrastructure, you'll need a DigitalOcean account, an SSH key added to that account, and a Personal Access Token (PAT). DigitalOcean's how-to guides cover both the SSH key and the token setup.

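- Configure required Variables

  - Create a terraform/terraform.tfvars file with your DigitalOcean credentials. The ssh_key_fingerprint variable has no default, so set it here as well, or Terraform will prompt for it.

    environment = "staging"
    do_token = "dop_v1_xxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxx" # Your DigitalOcean PAT
    ssh_key_fingerprint = "<your-ssh-key-fingerprint>" # Fingerprint of the SSH key in your account

  - Create an ansible/.vault_pass file with a secret password for encrypting Ansible's sensitive data

  - Update Terraform specs with desired values (e.g. region, instance size)

- Install Ansible Dependencies

  # Run from the repository root
  ansible-galaxy install -r ansible/requirements.yml

- Initialize Terraform

  # Initialize Terraform (terraform.tfvars is loaded automatically by plan and apply)
  cd terraform
  terraform init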

- Deploy Infrastructure

# Deploy with Terraform

terraform apply

# Export variables for Ansible

export APP_HOST=$(terraform output -raw app_host_ip)

export MONITORING_HOST=$(terraform output -raw monitoring_host_ip)

export DB_HOST=$(terraform output -raw database_host)

export DB_PORT=$(terraform output -raw database_port)

- Run Ansible Playbook

cd ../ansible

# Run Playbook

ansible-playbook site.yml --vault-password-file .vault_pass \

-e "app_server_ip=$APP_HOST" \

-e "monitoring_server_ip=$MONITORING_HOST" \

-e "db_host=$DB_HOST" \

-e "db_port=$DB_PORT" \

-e "db_name=defaultdb"
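As an alternative to exporting shell variables and passing them with -e, Terraform itself can write an inventory file for Ansible. The sketch below assumes the hashicorp/local provider is available (it is not declared in the main.tf above), and the resource label and the generated/hosts.yml path are hypothetical.

```hcl
# Renders a static Ansible inventory from the Droplet addresses.
# Assumes the hashicorp/local provider, which main.tf does not declare.
resource "local_file" "ansible_inventory" {
  filename        = "${path.module}/generated/hosts.yml"
  file_permission = "0644"

  content = yamlencode({
    all = {
      children = {
        app = {
          hosts = {
            "app-server" = {
              ansible_host = digitalocean_droplet.app_host.ipv4_address
              ansible_user = "root"
            }
          }
        }
        monitoring = {
          hosts = {
            "monitoring-server" = {
              ansible_host = digitalocean_droplet.monitoring_host.ipv4_address
              ansible_user = "root"
            }
          }
        }
      }
    }
  })
}
```

After terraform apply, the playbook could then be run with -i ../terraform/generated/hosts.yml from the ansible folder, leaving only the database values to pass as extra variables. The generated file mirrors the group and host names of inventory/staging/hosts.yml so that the hostvars lookup in prometheus.yml.j2 keeps working.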

Testing your Setup

- Access Grafana
  - Navigate to http://<monitoring-droplet-ip>:3000
  - Log in with admin as the username and your configured password
- Access Prometheus
  - Navigate to http://<monitoring-droplet-ip>:9090. The monitoring firewall only admits port 9090 traffic from inside the VPC, so open it from a host in the VPC or through an SSH tunnel.
  - Verify that targets are being scraped successfully
- Verify Application Metrics
  - Check that the http://<app-droplet-ip>:8080/metrics endpoint is exposed by your Go application
  - Verify Prometheus is successfully scraping the endpoint
  - Import recommended Go application dashboards in Grafana

Maintenance and Updates

- Monitoring Updates

- Regular updates to Prometheus and Grafana configurations

- Dashboard improvements based on needs

- Alert rule refinements

Application Updates

# Update application configuration

cd ansible

ansible-playbook -i inventory/staging/hosts.yml site.yml --tags update

Infrastructure Updates

# Update infrastructure

cd terraform

terraform plan # Review changes

terraform apply # Apply changes

Best Practices

- Security
  - Use environment variables for sensitive data
  - Implement proper firewall rules
  - Regular security updates
  - Restrict access to monitoring endpoints
- Backup
  - Regular database backups (handled by DigitalOcean)
  - Backup Grafana dashboards
  - Export and version control monitoring configurations
- Monitoring
  - Set up alerting rules
  - Regular review of metrics
  - Dashboard optimization
  - Capacity planning based on metrics
- Scaling
  - Monitor resource usage
  - Plan for horizontal scaling
  - Regular performance optimization
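As one illustration of "Restrict access to monitoring endpoints", SSH and Grafana could be limited to known address ranges instead of the whole internet. This is a sketch only: the admin_cidrs variable and the firewall name are hypothetical, and 203.0.113.10 is a documentation-only address to replace with your own.

```hcl
# Hypothetical allow-list of administrator networks.
variable "admin_cidrs" {
  description = "CIDR ranges allowed to reach SSH and Grafana"
  type        = list(string)
  default     = ["203.0.113.10/32"] # placeholder address, replace with your own
}

resource "digitalocean_firewall" "monitoring_restricted" {
  name        = "monitoring-restricted-example"
  droplet_ids = [digitalocean_droplet.monitoring_host.id]

  # SSH only from the allow-list
  inbound_rule {
    protocol         = "tcp"
    port_range       = "22"
    source_addresses = var.admin_cidrs
  }

  # Grafana only from the allow-list
  inbound_rule {
    protocol         = "tcp"
    port_range       = "3000"
    source_addresses = var.admin_cidrs
  }

  outbound_rule {
    protocol              = "tcp"
    port_range            = "1-65535"
    destination_addresses = ["0.0.0.0/0", "::/0"]
  }

  outbound_rule {
    protocol              = "udp"
    port_range            = "1-65535"
    destination_addresses = ["0.0.0.0/0", "::/0"]
  }
}
```

Because DigitalOcean firewall rules are additive, a resource like this would need to replace the broader monitoring_firewall rather than sit beside it. Otherwise the open rules on ports 22 and 3000 would still apply.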

Subscribe to ensure you don't miss next week's deep dive into basics of Linux for SRE. Your journey to mastering SRE continues! 🎯
